In a paper entitled “United States Marine Corps/Robotics Technology Consortium Cargo Unmanned Ground Vehicle,” Noah Zych, Principal Engineer – Unmanned Systems, Oshkosh Corp., writes: “The increasing level of technical functionality demonstrated by progressive unmanned ground vehicles (UGVs) has liberated them from the highly constrained environments of research laboratories and rigidly structured scenarios, and has transformed consideration of their use in military operational environments from hypothetical to imperative. In less than ten years, prototype full-size UGVs have advanced from faltering expeditions across only a few miles of static terrain to high speed trips in complex freeway traffic.” (Search 10685103 at www.oemoffhighway.com for the full paper.) The Marines are considering autonomous vehicles for a variety of jobs, from logistics convoys to route clearance.

Oshkosh Corp., Oshkosh, WI, has been developing its unmanned vehicle system platform for years, starting with its entry in the 2004 DARPA (Defense Advanced Research Projects Agency) Grand Challenge. Oshkosh’s robotics efforts began in response to the goal, established for the U.S. Armed Forces in 2001, of having one-third of all operational ground combat vehicles unmanned by 2015. Oshkosh has been working with the National Robotics Engineering Center (NREC) of Carnegie Mellon University to develop its robotic technologies since the two announced a joint partnership on the perception system in 2010.

The company’s TerraMax UGV, integrated on a Medium Tactical Vehicle Replacement (MTVR), was presented in August at the Association for Unmanned Vehicle Systems International (AUVSI) Unmanned Systems 2011 event in Washington, D.C. The technology had recently completed its first limited technical assessment (LTA) for the U.S. Marine Corps Cargo UGV (CUGV) initiative (see more on Oshkosh’s many LTAs in the sidebar on page XX).

The Cargo UGV program is sponsored by the Marine Corps Warfighting Laboratory and the Joint Ground Robotics Enterprise’s Robotics Technology Consortium. The project augments the MK25 Standard Cargo Truck variant of the Oshkosh Defense MTVR. The CUGV platform had to meet several requirements: the vehicle must be instantly operational in either manned or unmanned mode; provide full off-road mobility and payload capacity; operate, day or night, in urban, rural and primitive surroundings; and have minimal impact on the visual signature or reliability of the host vehicle.

The Concept of Operations (CONOPS) for the Cargo UGV was to carry out a convoy mission in a tactical environment with live forces, using both manned and unmanned vehicles. The exercise was to include a variety of operating conditions relevant to the terrain, with the overall goal of reducing Marines’ exposure to lethal attacks. Under the Command and Control Vehicle (C2V) CONOPS, a remote operator control unit would allow personnel to plan routes and initiate missions, maintain situational awareness beyond their line of sight, supervise and modify a vehicle’s behavior, review mission data and, of course, preserve the safety of the operator and occupants.

A CUGV can be run in several modes: fully autonomous as a convoy leader, or in shadow mode as a follower to a lead vehicle. In mixed convoys of several vehicles, the CUGV can be placed at any point in the convoy and maintain a safe following distance. Teleoperation is also an option, allowing the operator to drive a vehicle with a ruggedized game-style controller for throttle and steering. Just as in a video game, the operator can choose a viewing perspective, selecting among camera views on the front, rear, left or right of the vehicle, as well as aerial map views or in-the-cab perspectives.

The C2V operator control unit (OCU) is designed to place as little visual burden on the operator as possible, displaying only immediately necessary information. The system should accept input quickly, to maintain convoy tempo, and should require only infrequent operator intervention. The goal is to allow a single operator to monitor and control multiple vehicles at one time from one display.

The importance of hidden hardware

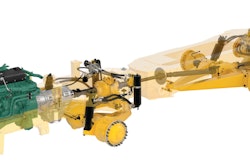

To make the vehicle fully autonomous, several hardware and software technologies must work in unison to gather the appropriate information and make informed decisions using the latest machine learning techniques. The TerraMax technology is a modular system that can be integrated into any military vehicle. Auxiliary system actuation (x-by-wire) includes the central tire inflation system, driveline locks, anti-lock braking, engine braking and steering, via the CAN-based SAE J1939 protocol.
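
J1939 messages ride on 29-bit extended CAN identifiers that pack a priority, a parameter group number (PGN) and the sender’s source address into fixed bit fields. The Python sketch below encodes and decodes that identifier following the general SAE J1939-21 layout. It illustrates the protocol only; it is not Oshkosh’s x-by-wire software, and the function names are hypothetical.

```python
def encode_j1939_id(priority: int, pgn: int, source_address: int,
                    destination: int = 0xFF) -> int:
    """Build a 29-bit J1939 CAN identifier (hypothetical helper).

    Layout: bits 28-26 priority, bits 25-8 the 18-bit PGN
    (EDP, DP, PDU format, PDU specific), bits 7-0 source address.
    """
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in 3 bits")
    if not 0 <= pgn <= 0x3FFFF:
        raise ValueError("PGN must fit in 18 bits")
    pdu_format = (pgn >> 8) & 0xFF
    if pdu_format < 240:
        # PDU1 (destination-specific): the PDU-specific byte carries the
        # destination address rather than being part of the group number.
        pgn = (pgn & 0x3FF00) | (destination & 0xFF)
    return (priority << 26) | (pgn << 8) | (source_address & 0xFF)


def decode_j1939_id(can_id: int) -> dict:
    """Split a 29-bit identifier back into its J1939 fields."""
    priority = (can_id >> 26) & 0x7
    pgn = (can_id >> 8) & 0x3FFFF
    source_address = can_id & 0xFF
    pdu_format = (pgn >> 8) & 0xFF
    if pdu_format < 240:
        destination = pgn & 0xFF   # PDU1: PS byte is a destination address
        pgn &= 0x3FF00             # ...and is not part of the PGN itself
    else:
        destination = 0xFF         # PDU2: always broadcast to all nodes
    return {"priority": priority, "pgn": pgn,
            "source": source_address, "destination": destination}
```

For instance, `encode_j1939_id(3, 0xF004, 0x00)` yields 0x0CF00400, the identifier commonly seen for the Electronic Engine Controller 1 message broadcast by an engine at source address 0.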

The hardware includes a suite of sensors: high-definition LIDAR (light detection and ranging), one wide-dynamic-range camera, one short-wave infrared camera, four situational awareness cameras, 12 short-range radars and three long-range radars. The short-range radars provide 360-degree close-proximity obstacle detection and avoidance, making the system suitable for convoy work in rugged conditions. For safety, data is transferred over radio links. “Low latency datalinks are necessary for direct operator control of a ground robot. Higher latency communications, such as satellite links, provide long distance capability and may be used for global mission planning from higher echelons, for example,” says John Beck, Chief Engineer – Unmanned Systems, Oshkosh Corp.

Military-grade GNSS (global navigation satellite system) is employed, but the vehicle’s map registration software allows the system to run without a satellite signal when GPS is blocked or unavailable, minimizing operational risk. All of this additional hardware is located where it will not hinder soldiers’ combat needs or compromise the vehicle’s utility. “We want minimum visual signature,” Beck says. “It’s important to hide sensors in the truck bodywork so it doesn’t look different from the vehicles without the autonomous system.”

Another excerpt from Noah Zych’s paper supports Beck’s statement: “…having unmanned assets that, at a range, are indistinguishable from manned vehicles enhances the survivability gains the UGVs present; if an adversary is unable to detect which vehicles in a convoy carry personnel, the risk of a casualty due to an ambush or remote control improvised explosive device [IED] detonation can be reduced.”

The hardware is fully integrated into Oshkosh’s on-board diagnostic system, Command Zone, to detect any problems or faults during vehicle operation.

Not-so-soft software

Several key software modules are used to paint a complete picture of the terrain, location and potential obstacles or threats. The Ground Surface Estimation software allows the UGV to determine where it should and should not drive based on slope and roughness. The software filters out positive obstacles (above-ground features such as bushes and trees) and reports where the actual ground surface is. The slope and roughness calculations help the vehicle determine an appropriate speed, as well as which areas to avoid.
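
The article does not say how slope and roughness are computed. A minimal sketch, assuming a regular height grid from which positive obstacles have already been removed: fit a least-squares plane to each 3×3 patch, take slope from the plane’s gradient and roughness as the RMS residual, then map both to a speed. The thresholds and the linear slow-down rule in the hypothetical `speed_limit` function are placeholders, not TerraMax values.

```python
import math

OFFSETS = (-1, 0, 1)  # cell offsets of a 3x3 patch around its centre


def fit_plane(z, spacing):
    """Least-squares plane z = a*x + b*y + c over a 3x3 height patch.

    z[row][col] holds ground heights in metres; rows step in y and columns
    in x, both `spacing` metres apart. Because the offsets are symmetric
    about the centre, the normal equations decouple into closed forms.
    """
    sxz = syz = sz = 0.0
    for row, dy in enumerate(OFFSETS):
        for col, dx in enumerate(OFFSETS):
            sxz += dx * spacing * z[row][col]
            syz += dy * spacing * z[row][col]
            sz += z[row][col]
    sum_sq = 6 * spacing ** 2  # sum of x^2 (equal to sum of y^2) over 9 cells
    return sxz / sum_sq, syz / sum_sq, sz / 9


def slope_and_roughness(z, spacing):
    """Return (slope in degrees, RMS deviation from the plane in metres)."""
    a, b, c = fit_plane(z, spacing)
    sq = 0.0
    for row, dy in enumerate(OFFSETS):
        for col, dx in enumerate(OFFSETS):
            predicted = a * dx * spacing + b * dy * spacing + c
            sq += (z[row][col] - predicted) ** 2
    slope_deg = math.degrees(math.atan(math.hypot(a, b)))
    return slope_deg, math.sqrt(sq / 9)


def speed_limit(slope_deg, roughness_m, v_max=15.0,
                max_slope_deg=25.0, max_roughness_m=0.3):
    """Hypothetical policy: scale speed down linearly as slope or roughness
    grows, and return 0.0 (avoid the cell) once either reaches its limit."""
    if slope_deg >= max_slope_deg or roughness_m >= max_roughness_m:
        return 0.0
    factor = min(1 - slope_deg / max_slope_deg,
                 1 - roughness_m / max_roughness_m)
    return v_max * factor
```

Fitting a plane before measuring roughness keeps a smooth but steep slope from being mistaken for rough ground.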

Terrain Classification software takes the ground surface estimate one step further by adding a three-dimensional (3D) element. This allows the vehicle to drive where vegetation encroaches on the path, either from the sides or interspersed in the roadway, while still distinguishing small obstacles it should not drive over. The system can judge elevation, detect geometric obstacles and classify vegetation, so the vehicle does not stop every time it comes across a shrub. It learns as it builds up its 3D terrain library, allowing for a smoother ride and fewer awkward stops.
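
How Terrain Classification tells vegetation from rigid obstacles is not described. One simple cue, assumed here purely for illustration, is permeability: laser beams pass through a shrub’s foliage far more often than through a rock or tree trunk. The sketch accumulates hit and pass-through counts per 3D voxel as scans arrive (a crude stand-in for a growing terrain library) and labels porous voxels as vegetation. The `Voxel` class and both thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Voxel:
    """Per-voxel evidence accumulated across LIDAR scans (hypothetical)."""
    hits: int = 0            # beams whose return landed inside this voxel
    pass_throughs: int = 0   # beams that crossed the voxel and returned beyond it

    def record(self, hit: bool) -> None:
        """Add one beam observation from a new scan."""
        if hit:
            self.hits += 1
        else:
            self.pass_throughs += 1

    @property
    def permeability(self) -> float:
        total = self.hits + self.pass_throughs
        return self.pass_throughs / total if total else 0.0


def classify(voxel: Voxel, min_observations: int = 10,
             vegetation_threshold: float = 0.4) -> str:
    """Label a voxel 'unknown', 'vegetation' (porous, may be driven through)
    or 'obstacle' (dense, must be avoided). Thresholds are placeholders."""
    if voxel.hits + voxel.pass_throughs < min_observations:
        return "unknown"
    if voxel.permeability >= vegetation_threshold:
        return "vegetation"
    return "obstacle"
```

Requiring a minimum number of observations keeps a single stray beam from deciding the label; confidence grows as the vehicle sees the same spot from more scans.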

“The goal is to have this vehicle function like it has a human driver. What we don’t want is for it to act erratically and allow someone to pick out which vehicles are driverless and target soldier-containing vehicles,” Beck states. “The motion planner provides exceptional performance around corners and at higher speeds, and is able to capitalize on the perception system to enable mobility in rough terrain and challenging obstacle environments.”

The perception information feeds into a cost map that the vehicle then uses. “So you have High Cost or Lethal Cost, for example: Don’t drive off this cliff or into this tree. Similar to the learning technique of our perception system (what vegetation is, where the road is, what is ground, rocks, dust, etc.), we’re applying machine learning to the motion planner, where we can manually drive a particular route and the vehicle will learn the right way to ‘Cost’ the trajectories; in other words, what is a good way to drive that route.

“Rather than trying to program human intelligence, why not let the machine learn itself?” By using data logs to teach the motion planner the best way to drive a corner, Oshkosh is able to get better performance out of the system.
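
The cost map and learning loop described above can be illustrated with a toy example. In this sketch, each map cell carries a feature vector (here, distance and a roughness score) or `None` if perception marked it lethal, a trajectory’s cost is a weighted sum of its features, and the weights are adjusted from a manually driven demonstration with a simple perceptron-style update until the planner picks the demonstrated path. This is a generic learning-from-demonstration technique chosen for illustration, not necessarily the method Oshkosh or NREC uses; all names, features and numbers are hypothetical.

```python
import math

LETHAL = math.inf  # "don't drive off this cliff or into this tree"


def path_features(feature_map, path):
    """Sum per-cell feature vectors along a path of (row, col) cells.
    Returns None if the path touches a cell perception marked lethal."""
    total = None
    for row, col in path:
        cell = feature_map[row][col]
        if cell is None:
            return None
        total = list(cell) if total is None else [t + f for t, f in zip(total, cell)]
    return total


def path_cost(weights, features):
    """Weighted sum of features; LETHAL for a lethal path."""
    if features is None:
        return LETHAL
    return sum(w * f for w, f in zip(weights, features))


def plan(weights, feature_map, candidates):
    """Stand-in planner: cheapest candidate, or None if every one is lethal."""
    best, best_cost = None, LETHAL
    for name, path in candidates.items():
        cost = path_cost(weights, path_features(feature_map, path))
        if cost < best_cost:
            best, best_cost = name, cost
    return best


def learn_from_demo(weights, feature_map, candidates, demo, lr=0.1, max_iters=100):
    """Nudge weights until the planner reproduces the human-driven path.

    `demo` must name a non-lethal candidate. Each step moves the weights
    along (f_planned - f_demo), lowering the demonstrated path's cost
    relative to the planner's current choice. Weights are clipped at zero
    so no feature turns into a reward.
    """
    weights = list(weights)
    f_demo = path_features(feature_map, candidates[demo])
    for _ in range(max_iters):
        chosen = plan(weights, feature_map, candidates)
        if chosen is None or chosen == demo:
            break
        f_plan = path_features(feature_map, candidates[chosen])
        weights = [max(0.0, w - lr * (d - p))
                   for w, d, p in zip(weights, f_demo, f_plan)]
    return weights


# Toy corner: each cell holds [distance_m, roughness_score]; None = tree.
FEATURE_MAP = [
    [[4, 0], [4, 1], [4, 0]],   # wide, smooth arc around the corner
    [[5, 2], [5, 3], None],     # tight line over rough ground, then a tree
]
CANDIDATES = {
    "smooth_arc": [(0, 0), (0, 1), (0, 2)],    # features [12, 1]
    "cut_corner": [(1, 0), (1, 1)],            # features [10, 5]
    "through_tree": [(1, 0), (1, 1), (1, 2)],  # lethal
}
```

With starting weights `[1.0, 0.1]` the planner cuts the corner; one update toward the demonstrated `smooth_arc` shifts weight onto roughness, after which the planner prefers the arc. `through_tree` is never chosen because its lethal cell makes its cost infinite.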

The Terrain Classification system also helps in dusty environments, where traditional LADAR-based perception systems return large numbers of false positives because airborne dust reflects the laser pulses.

The LIDAR and radar Moving Obstacle Detection systems track dynamic objects to determine whether they pose a threat, maintain safe following distances behind leader vehicles and predict the objects’ paths. On the operator’s screen, a green line extending from the front of the vehicle shows the LIDAR-based estimate of where the tracked moving object will be one second later. The motion planning software must handle moving obstacle detection and tracking carefully for optimal performance, Beck confirms. “You want to be able to keep a safe following distance for safety reasons. Consider the case where you’re cresting a hill and lose track of the vehicle in front; the motion planner needs to understand that there is a possibility that the preceding vehicle has stopped on the other side of the hill, and to proceed with caution,” he says.
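
The one-second prediction line and the hill-crest caution can both be expressed with simple kinematics. This sketch assumes a constant-velocity model for the prediction and a constant braking deceleration `a` for the speed cap, v = sqrt(2a(d - d0)), where d is the gap and d0 a standoff distance. The function names and default values are hypothetical; TerraMax’s actual motion planner is not described at this level of detail.

```python
import math


def predict_position(position, velocity, horizon_s=1.0):
    """Constant-velocity extrapolation of a tracked object's (x, y) position."""
    return (position[0] + velocity[0] * horizon_s,
            position[1] + velocity[1] * horizon_s)


def max_safe_speed(gap_m, decel_mps2=2.0, standoff_m=4.0):
    """Highest speed (m/s) from which the vehicle can stop with standoff_m
    to spare, treating the obstacle as stationary: v^2 = 2*a*(gap - standoff)."""
    usable = gap_m - standoff_m
    return math.sqrt(2 * decel_mps2 * usable) if usable > 0 else 0.0


def follow_speed(track_visible, leader_speed, gap_m,
                 decel_mps2=2.0, standoff_m=4.0):
    """Speed command for a follower.

    Tracked leader: never exceed its speed or the stopping limit (a
    conservative choice that assumes the leader could stop at once).
    Lost track, e.g. over a crest: assume the leader may have stopped at
    its last known position and obey the stopping limit alone.
    """
    limit = max_safe_speed(gap_m, decel_mps2, standoff_m)
    if track_visible:
        return min(leader_speed, limit)
    return limit
```

When the track drops out over a crest, the caller keeps `gap_m` as the distance to the leader’s last known position, so the allowed speed falls as the vehicle closes on the point where the leader may have stopped.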

The radar system is very good at detecting the velocities of moving obstacles, and the LIDAR provides excellent range information. “We’re currently working on improved fusion of the two sensor modalities,” says Beck. “We’ve had some level of fusion, but the performance is getting better and we’re also training the classifier with the radar information.”
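
Beck does not detail the fusion method. A textbook starting point, shown here only as an illustration, is inverse-variance weighting of independent estimates: each sensor’s reading is weighted by the reciprocal of its variance, so LIDAR dominates the fused range and radar dominates the fused velocity. The function name and the variances in the example are made up, and practical trackers commonly embed this idea in a Kalman filter rather than fusing single readings.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) pairs.

    Returns (fused value, fused variance). The fused variance is never larger
    than the smallest input variance, so adding a sensor never hurts.
    """
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    inv_total = sum(1.0 / var for _, var in estimates)
    value = sum(val / var for val, var in estimates) / inv_total
    return value, 1.0 / inv_total
```

For example, fusing a LIDAR range of 50.0 m (variance 0.01) with a radar range of 51.0 m (variance 1.0) gives about 50.01 m, with a fused variance just under the LIDAR’s.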

The operator control unit display can show overhead imagery, set up missions (automated routes and driving parameters) and let the operator give the system feedback when it comes across an obstacle, either confirming that it may continue or altering the course accordingly. “We can set different zones for specific terrains. A Go Zone is an area with no obstacles where the vehicle can drive freely at a set rate of speed. There are Slow Zones, and then there are different areas of operation including urban, rural and primitive,” explains Beck. “If we know a particular area is a Primitive Zone, we can set the machine at a maximum speed limit, lock up all of its axles and set its tire pressure system to mud and snow conditions, for example.”
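
A zone like the ones Beck describes is essentially a polygon drawn on the overhead map plus a bundle of operating parameters. The sketch below is a hypothetical data structure (the field names and tire-pressure preset strings are invented for illustration) with a standard even-odd ray-casting test to find which zone contains the vehicle’s current position.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """Hypothetical zone definition; not an Oshkosh data format."""
    name: str
    kind: str                   # e.g. "go", "slow", "urban", "rural", "primitive"
    polygon: list               # [(x, y), ...] vertices in map coordinates
    max_speed_mph: float
    lock_axles: bool = False
    tire_mode: str = "highway"  # invented central-tire-inflation preset name

    def contains(self, x, y):
        """Even-odd ray casting: count edges crossed by a ray toward +x."""
        inside = False
        pts = self.polygon
        j = len(pts) - 1
        for i in range(len(pts)):
            xi, yi = pts[i]
            xj, yj = pts[j]
            if (yi > y) != (yj > y):  # edge straddles the ray's height
                x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
                if x < x_cross:
                    inside = not inside
            j = i
        return inside


def active_zone(zones, x, y):
    """Return the first zone containing the point, or None."""
    return next((z for z in zones if z.contains(x, y)), None)
```

A primitive zone, for instance, might be declared as `Zone("wadi", "primitive", [(0, 0), (100, 0), (100, 100), (0, 100)], 15, lock_axles=True, tire_mode="mud_snow")`, and the vehicle would apply its axle and tire settings whenever `active_zone` returns it.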

Zones can be created or changed on the fly, and operating parameters can be altered through operator intervention; alternatively, operation can be completely hands-off, with the vehicle driving itself within the preapproved program parameters. If the vehicle is in a zone with a maximum speed of 50 mph and approaches a zone with a different maximum, it begins to slow down or speed up accordingly so that the transition between zones is smooth.
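
Slowing ahead of a lower-limit zone, rather than at its edge, follows from constant-deceleration kinematics: to arrive at the new limit v1 exactly at the boundary from distance d, the vehicle may travel no faster than sqrt(v1^2 + 2ad). The sketch below assumes SI units (50 mph is about 22.4 m/s), a hypothetical comfortable deceleration, and a policy of holding the current limit until the boundary when the next zone is faster. It is not TerraMax’s actual speed controller.

```python
import math


def target_speed(current_limit, next_limit, distance_to_boundary, decel=1.5):
    """Speed command (m/s) while approaching a zone boundary.

    Slowing down: follow the braking curve v = sqrt(next^2 + 2*decel*d) so the
    vehicle reaches next_limit exactly at the boundary.
    Speeding up: hold the current limit until the boundary is crossed.
    """
    if distance_to_boundary <= 0:          # already inside the new zone
        return next_limit
    if next_limit >= current_limit:        # faster zone ahead
        return current_limit
    braking_curve = math.sqrt(next_limit ** 2 + 2 * decel * distance_to_boundary)
    return min(current_limit, braking_curve)
```

Far from the boundary the braking curve exceeds the current limit, so the command is unchanged; it only starts to bite once the remaining distance is too short to shed speed comfortably.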

Challenges and potential

“For LIDAR, water can be an issue because it uses light and when light hits a surface, the return is what paints the accurate picture of the world,” explains Beck. “But with water, sometimes it is reflective, so the light hits the water and shoots off into space, making a puddle look like a huge hole, or it can bounce off the water and hit an obstacle and make an obstacle seem further away.”

Oshkosh is working on a reflection filter to better detect when water is present. “LIDAR is incapable of detecting water depths; however, we just understand that there is a surface there and it’s not a deep hole. If the vehicle classifies something as a water crossing, it will stop and ask the operator what to do and how to proceed,” says Beck.
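
The “obstacle seem further away” effect Beck describes has a simple geometric explanation. The sensor reports range along the outgoing beam, so after a specular bounce off a flat, horizontal water surface, the return lands at the mirror image of the real target below the surface. Assuming the water height is already known, one illustrative correction (not Oshkosh’s reflection filter, whose design the article does not describe) reflects any return that sits well below the surface back above it. The function name and tolerance are hypothetical, and beams that bounce off into space produce no return at all, which this sketch cannot fix.

```python
def unmirror(point, water_height, tolerance=0.2):
    """Correct a LIDAR return that appears below a detected water surface.

    A specular bounce off the horizontal plane z = water_height places the
    apparent point at the real target's mirror image, so reflecting it back
    across the plane recovers a candidate true position.
    Returns (point, was_reflected).
    """
    x, y, z = point
    if z < water_height - tolerance:
        return (x, y, 2 * water_height - z), True
    return point, False
```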

The UGV has been received by military personnel with great enthusiasm. After a few days of training, the first Marines to test the system were adamant that, given a few more days, they would be comfortable controlling three to five vehicles at a time.

In June, the second Cargo UGV (CUGV2) will undergo a third LTA, this time with multiple-vehicle scenarios. The capstone event is a Limited Objective Experiment in which Marines will be trained on the system and use it in simulated logistics operations that present multiple scenarios seen in actual combat.

Future applications for the TerraMax UGV system include construction, firefighting and potentially snow removal, where operator safety is of particular concern.

**To read Noah Zych’s paper, United States Marine Corps/Robotics Technology Consortium Cargo Unmanned Ground Vehicle, in full, including insight into the preliminary results from Oshkosh’s first Limited Technical Assessment for the Cargo UGV, go online to www.oemoffhighway.com and search ID 10685103.**
